Introduction

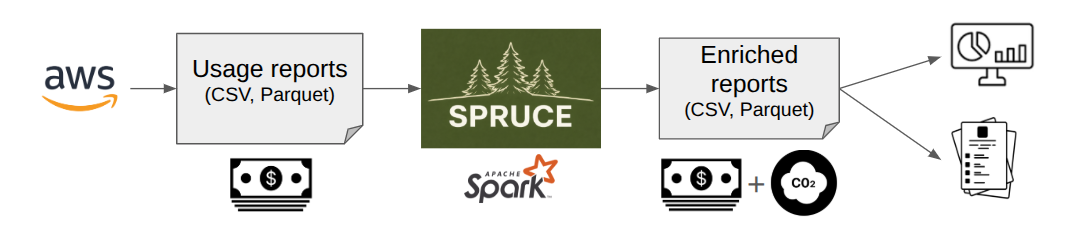

SPRUCE helps estimate the environmental impact of your cloud usage. By leveraging open source models and data, it enriches usage reports generated by cloud providers and allows you to build reports and visualisations. Having the GreenOps and FinOps data in the same place makes it easier to expose your costs and impacts side by side.

Please note that SPRUCE only handles CUR reports from AWS, and not all AWS services are covered. However, the services that make up most of the cost in typical usage already get estimates.

SPRUCE uses Apache Spark® to read and write the usage reports (typically in Parquet format) in a scalable way and, thanks to its modular approach, splits the enrichment of the data into configurable stages.

A typical sequence of stages would be:

- estimation of embodied emissions from the hardware

- estimation of energy used

- application of PUE and other overheads

- application of carbon intensity factors
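To make the idea of chained stages concrete, here is a minimal Java sketch. It is not SPRUCE's actual API. The class StagePipelineSketch, the representation of a row as a map of column names to values, and all the numbers are hypothetical placeholders. It only shows that each stage adds columns that later stages can build on.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.UnaryOperator;

// Hypothetical sketch, not SPRUCE's API: a row is a map of column name -> value,
// and each stage returns the row with extra columns. All numbers are placeholders.
final class StagePipelineSketch {

    /** Runs the stages in order, so each one can use the columns added by the previous ones. */
    static Map<String, Double> enrich(Map<String, Double> row,
                                      List<UnaryOperator<Map<String, Double>>> stages) {
        Map<String, Double> current = new HashMap<>(row); // mutable copy of the input row
        for (UnaryOperator<Map<String, Double>> stage : stages) {
            current = stage.apply(current);
        }
        return current;
    }

    /** Mirrors the typical sequence: embodied emissions, energy, overheads, carbon intensity. */
    static List<UnaryOperator<Map<String, Double>>> typicalStages() {
        return List.of(
                withColumn("embodied_emissions_co2eq_g", 50.0), // placeholder hardware estimate
                withColumn("operational_energy_kwh", 2.0),      // placeholder energy estimate
                withColumn("power_usage_effectiveness", 1.15),  // placeholder overhead
                withColumn("carbon_intensity", 400.0),          // placeholder gCO2eq per kWh
                row -> {
                    // Relies only on columns produced by the preceding stages.
                    double grams = row.get("operational_energy_kwh")
                            * row.get("power_usage_effectiveness")
                            * row.get("carbon_intensity");
                    row.put("operational_emissions_co2eq_g", grams);
                    return row;
                });
    }

    /** Builds a stage that simply sets one column to a fixed value. */
    private static UnaryOperator<Map<String, Double>> withColumn(String name, double value) {
        return row -> {
            row.put(name, value);
            return row;
        };
    }

    public static void main(String[] args) {
        Map<String, Double> enriched = enrich(Map.of(), typicalStages());
        System.out.println(enriched.get("operational_emissions_co2eq_g")); // roughly 920 g
    }
}
```

Because the order of the list matters, swapping two stages that depend on each other would fail at runtime with a missing column, which is why the stages are listed and configured in order.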

Have a look at the methodology section for more details.

One of the benefits of using Apache Spark is that you can use EMR on AWS to enrich the CURs at scale without having to export or expose any of your data.

The code of the project is in our GitHub repo.

Spruce is licensed under the Apache License, Version 2.0.

Quick start using Docker 🐳

Prerequisites

You will need to have CUR reports as inputs. Those are generated via Data Exports and stored on S3 as Parquet files. See the instructions in Generate Cost and Usage Reports.

For this tutorial, we will assume that you copied the S3 files to your local file system. You can do this with the AWS CLI:

```bash
aws s3 cp s3://{bucket}/{prefix}/{data_export_name}/data/ curs --recursive
```

You will also need to have Docker installed.

With Docker

Pull the latest Docker image with:

```bash
docker pull ghcr.io/digitalpebble/spruce
```

This retrieves a Docker image containing Apache Spark as well as the SPRUCE jar.

The command below processes the data locally by mounting the directories containing the CURs and output as volumes:

```bash
docker run -it -v $(pwd)/curs:/curs -v $(pwd)/output:/output --rm --name spruce --network host \
  ghcr.io/digitalpebble/spruce \
  /opt/spark/bin/spark-submit \
  --class com.digitalpebble.spruce.SparkJob \
  --driver-memory 4g \
  --master 'local[*]' \
  /usr/local/lib/spruce.jar \
  -i /curs -o /output/enriched
```

The -i parameter specifies the location of the directory containing the CUR reports in Parquet format.

The -o parameter specifies the location where the enriched Parquet files are written.

The -c option lets you specify a JSON configuration file to override the default settings.

The output directory contains an enriched copy of the input CURs. See Explore the results to understand what the output contains.

Quick start using Apache Spark

Instead of using a container, you can run SPRUCE directly on Apache Spark either locally or on a cluster.

Prerequisites

You will need to have CUR reports as inputs. Those are generated via Data Exports and stored on S3 as Parquet files. See the instructions in Generate Cost and Usage Reports.

For this tutorial, we will assume that you copied the S3 files to your local file system. You can do this with the AWS CLI:

```bash
aws s3 cp s3://{bucket}/{prefix}/{data_export_name}/data/ curs --recursive
```

To run SPRUCE locally, you need Apache Spark installed and added to the $PATH.

Finally, you need the JAR containing the code and resources for SPRUCE. You can copy it from the latest release or, alternatively, build it from source, which requires Apache Maven and Java 17 or above:

```bash
mvn clean package
```

Run on Apache Spark

If you downloaded a released JAR, adjust the path in the command below so that it matches its location.

```bash
spark-submit --class com.digitalpebble.spruce.SparkJob --driver-memory 8g ./target/spruce-*.jar -i ./curs -o ./output
```

The -i parameter specifies the location of the directory containing the CUR reports in Parquet format.

The -o parameter specifies the location where the enriched Parquet files are written.

The -c option lets you specify a JSON configuration file to override the default settings.

The output directory contains an enriched copy of the input CURs. See Explore the results to understand what the output contains.

Explore the results

Using DuckDB locally (or Athena if the output was written to S3):

Breakdown by billing period

```sql
create table enriched_curs as select * from 'output/**/*.parquet';

select
  BILLING_PERIOD,
  round(sum(operational_emissions_co2eq_g) / 1000, 2) as co2_usage_kg,
  round(sum(embodied_emissions_co2eq_g) / 1000, 2) as co2_embodied_kg,
  round(sum(operational_energy_kwh), 2) as energy_usage_kwh
from enriched_curs
group by BILLING_PERIOD
order by BILLING_PERIOD;
```

This should give an output similar to

| BILLING_PERIOD | co2_usage_kg | co2_embodied_kg | energy_usage_kwh |
|---|---|---|---|
| 2025-05 | 863.51 | 89.73 | 2029.15 |
| 2025-06 | 774.01 | 85.01 | 1811.98 |
| 2025-07 | 812.07 | 87.19 | 1901.13 |
| 2025-08 | 848.7 | 88.15 | 1982.56 |
| 2025-09 | 866.24 | 86.76 | 2017.36 |

Breakdown per product, service and operation

```sql
select line_item_product_code, product_servicecode, line_item_operation,
  round(sum(operational_emissions_co2eq_g) / 1000, 2) as co2_usage_kg,
  round(sum(embodied_emissions_co2eq_g) / 1000, 2) as co2_embodied_kg,
  round(sum(operational_energy_kwh), 2) as energy_usage_kwh
from enriched_curs where operational_emissions_co2eq_g > 0.01
group by all
order by 4 desc, 5 desc, 6 desc, 2;
```

This should give an output similar to

| line_item_product_code | product_servicecode | line_item_operation | co2_usage_kg | co2_embodied_kg | energy_usage_kwh |
|---|---|---|---|---|---|
| AmazonECS | AmazonECS | FargateTask | 1499.93 | NULL | 3784.91 |
| AmazonEC2 | AmazonEC2 | RunInstances | 1365.7 | 433.57 | 3068.87 |
| AmazonS3 | AmazonS3 | GlacierInstantRetrievalStorage | 554.85 | NULL | 1224.13 |
| AmazonS3 | AmazonS3 | OneZoneIAStorage | 249.22 | NULL | 548.48 |
| AmazonS3 | AmazonS3 | StandardStorage | 210.57 | NULL | 469.54 |
| AmazonEC2 | AmazonEC2 | CreateVolume-Gp3 | 102.27 | NULL | 230.39 |
| AmazonEC2 | AmazonEC2 | RunInstances:SV001 | 66.41 | 3.27 | 146.15 |
| AmazonDocDB | AmazonDocDB | CreateCluster | 49.93 | NULL | 109.89 |
| AmazonS3 | AmazonS3 | IntelligentTieringAIAStorage | 17.02 | NULL | 37.47 |
| AmazonEC2 | AmazonEC2 | CreateVolume-Gp2 | 11.03 | NULL | 34.37 |
| AmazonS3 | AmazonS3 | StandardIAStorage | 9.02 | NULL | 19.85 |
| AmazonEC2 | AmazonEC2 | CreateSnapshot | 8.05 | NULL | 20.6 |
| AmazonECR | AmazonECR | TimedStorage-ByteHrs | 6.96 | NULL | 15.31 |
| AmazonEC2 | AWSDataTransfer | RunInstances | 2.8 | NULL | 6.25 |
| AmazonS3 | AmazonS3 | OneZoneIASizeOverhead | 2.23 | NULL | 4.9 |
| AmazonS3 | AWSDataTransfer | GetObjectForRepl | 1.84 | NULL | 4.06 |
| AmazonS3 | AWSDataTransfer | UploadPartForRepl | 1.66 | NULL | 3.64 |
| AmazonS3 | AmazonS3 | DeleteObject | 1.45 | NULL | 3.2 |
| AmazonMQ | AmazonMQ | CreateBroker:0001 | 1.16 | NULL | 2.55 |
| AmazonECR | AmazonECR | EUW2-TimedStorage-ByteHrs | 0.43 | NULL | 2.16 |
| AmazonS3 | AmazonS3 | StandardIASizeOverhead | 0.3 | NULL | 0.65 |
| AmazonS3 | AWSDataTransfer | PutObjectForRepl | 0.19 | NULL | 0.42 |
| AWSBackup | AWSBackup | Storage | 0.13 | NULL | 0.64 |
| AmazonS3 | AmazonS3GlacierDeepArchive | DeepArchiveStorage | 0.1 | NULL | 0.22 |
| AmazonS3 | AWSDataTransfer | PutObject | 0.08 | NULL | 0.17 |
| AmazonECR | AWSDataTransfer | downloadLayer | 0.07 | NULL | 0.19 |
| AmazonEC2 | AWSDataTransfer | PublicIP-In | 0.06 | NULL | 0.14 |
| AmazonCloudWatch | AmazonCloudWatch | HourlyStorageMetering | 0.02 | NULL | 0.05 |
| AmazonEFS | AmazonEFS | Storage | 0.01 | NULL | 0.03 |

Cost coverage

To measure the proportion of the costs for which emissions were calculated:

```sql
select
  round(covered * 100 / "total costs", 2) as percentage_costs_covered
from (
  select
    sum(line_item_unblended_cost) as "total costs",
    sum(line_item_unblended_cost) filter (where operational_emissions_co2eq_g is not null) as covered
  from enriched_curs
  where line_item_line_item_type like '%Usage'
);
```

The figure will vary depending on the services you use. We have measured up to 77% coverage for some users.
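The coverage logic can also be expressed outside SQL. The Java sketch below mirrors the query over in-memory rows. The record LineItem is a hypothetical stand-in for the three CUR columns the query uses, and the guard against a zero total is an assumption of this sketch, not part of SPRUCE.

```java
import java.util.List;

// Sketch of the cost coverage query above over in-memory rows instead of DuckDB.
// LineItem is a hypothetical stand-in for line_item_line_item_type,
// line_item_unblended_cost and operational_emissions_co2eq_g.
final class CostCoverageSketch {

    record LineItem(String lineItemType, double unblendedCost, Double operationalEmissionsG) {}

    /** Percentage (rounded to 2 decimals) of usage costs whose emissions were estimated. */
    static double percentageCovered(List<LineItem> items) {
        double total = 0;
        double covered = 0;
        for (LineItem item : items) {
            // Same filter as: where line_item_line_item_type like '%Usage'
            if (!item.lineItemType().endsWith("Usage")) {
                continue;
            }
            total += item.unblendedCost();
            // Same filter as: filter (where operational_emissions_co2eq_g is not null)
            if (item.operationalEmissionsG() != null) {
                covered += item.unblendedCost();
            }
        }
        if (total == 0) {
            return 0; // avoid dividing by zero (assumption of this sketch)
        }
        return Math.round(covered * 100 / total * 100) / 100.0;
    }

    public static void main(String[] args) {
        List<LineItem> items = List.of(
                new LineItem("Usage", 60.0, 10.0),
                new LineItem("DiscountedUsage", 40.0, null), // usage without an estimate
                new LineItem("Tax", 30.0, null));            // excluded: not a usage line
        System.out.println(percentageCovered(items)); // 60.0
    }
}
```

Note that non-usage lines such as taxes are excluded from both the numerator and the denominator, so they neither raise nor lower the coverage figure.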

Breakdown per region

```sql
with agg as (
  select
    region as region_code,
    sum(operational_emissions_co2eq_g) as operational_emissions_g,
    sum(embodied_emissions_co2eq_g) as embodied_emissions_g,
    sum(operational_energy_kwh) as energy_kwh,
    sum(pricing_public_on_demand_cost) as public_cost,
    avg(carbon_intensity) as avg_carbon_intensity,
    avg(power_usage_effectiveness) as pue
  from enriched_curs
  where operational_emissions_co2eq_g > 1
  group by 1
)
select
  region_code,
  round(operational_emissions_g / 1000, 2) as co2_usage_kg,
  round(energy_kwh, 2) as energy_usage_kwh,
  round(avg_carbon_intensity, 2) as carbon_intensity,
  round(pue, 2) as pue,
  -- coalesce avoids a NULL result in regions with no embodied emissions estimates
  round((operational_emissions_g + coalesce(embodied_emissions_g, 0)) / public_cost, 2) as g_co2_per_dollar
from agg
order by energy_usage_kwh desc, co2_usage_kg desc, region_code desc;
```

Here, g_co2_per_dollar is the total emissions in grams (usage + embodied) divided by the public on-demand cost.

Below is an example of what the results might look like.

| region_code | co2_usage_kg | energy_usage_kwh | carbon_intensity | pue | g_co2_per_dollar |
|---|---|---|---|---|---|
| us-east-1 | 6607.83 | 14542.69 | 400.33 | 1.13 | 30.85 |
| us-east-2 | 1150.96 | 2533.06 | 400.33 | 1.13 | 152.91 |
| eu-west-2 | 385.54 | 1940.72 | 175.03 | 1.14 | 27.15 |
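The g_co2_per_dollar metric is simple arithmetic, which the short Java sketch below makes explicit. The class name and the input values are illustrative only. Rejecting a non-positive cost is a design choice of this sketch; the SQL query does not do this.

```java
// Sketch of the g_co2_per_dollar metric computed in the region query:
// (operational + embodied emissions in grams) / public on-demand cost in dollars.
final class CarbonPerDollarSketch {

    /** Total emissions in grams per dollar of public on-demand cost. */
    static double gCo2PerDollar(double operationalG, double embodiedG, double publicOnDemandCost) {
        if (publicOnDemandCost <= 0) {
            throw new IllegalArgumentException("public on-demand cost must be positive");
        }
        return (operationalG + embodiedG) / publicOnDemandCost;
    }

    public static void main(String[] args) {
        // Illustrative inputs: 25 kg operational + 5 kg embodied for $1,000 of on-demand usage.
        System.out.println(gCo2PerDollar(25_000, 5_000, 1_000)); // 30.0
    }
}
```

Dividing by the public on-demand price rather than the amount actually paid keeps the ratio comparable across regions and accounts, regardless of discounts or savings plans.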

Breakdown per user tag

User tags are how environmental impacts can be allocated to a business unit, team, product, environment, etc. Tagging is as fundamental to a GreenOps practice as it is to FinOps.

By enriching data at the finest possible level, SPRUCE lets you aggregate the impacts by the tags that are relevant to your organisation. For instance, for a tag cost_category_top_level, the query would be:

```sql
select resource_tags['cost_category_top_level'],
  round(sum(operational_energy_kwh), 2) as energy_kwh,
  round(sum(operational_emissions_co2eq_g) / 1000, 2) as operational_kg,
  round(sum(embodied_emissions_co2eq_g) / 1000, 2) as embodied_kg
from enriched_curs
group by 1
order by 3 desc;
```
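For readers who post-process the enriched data in application code rather than SQL, the same per-tag aggregation is sketched below in Java. The record Row is a hypothetical stand-in for the resource_tags map and the operational_emissions_co2eq_g column. Grouping untagged rows under the label "(untagged)" is an assumption of this sketch.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Sketch of the per-tag aggregation in the query above over hypothetical in-memory rows.
final class TagBreakdownSketch {

    record Row(Map<String, String> resourceTags, double operationalEmissionsG) {}

    /** Sums operational emissions in kg per value of the given tag key, sorted by tag value. */
    static Map<String, Double> operationalKgByTag(List<Row> rows, String tagKey) {
        Map<String, Double> totals = new TreeMap<>();
        for (Row row : rows) {
            // Rows without the tag are grouped under a placeholder label (assumption of this sketch).
            String value = row.resourceTags().getOrDefault(tagKey, "(untagged)");
            totals.merge(value, row.operationalEmissionsG() / 1000, Double::sum);
        }
        return totals;
    }

    public static void main(String[] args) {
        List<Row> rows = List.of(
                new Row(Map.of("cost_category_top_level", "platform"), 1500),
                new Row(Map.of("cost_category_top_level", "platform"), 500),
                new Row(Map.of(), 250));
        System.out.println(operationalKgByTag(rows, "cost_category_top_level"));
        // {(untagged)=0.25, platform=2.0}
    }
}
```

Keeping an explicit bucket for untagged rows makes gaps in the tagging policy visible instead of silently dropping those emissions.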

Methodology

SPRUCE uses third-party resources and models to estimate the environmental impact of cloud services. It enriches Cost and Usage Reports (CUR) with additional columns, allowing users to practise GreenOps and build dashboards and reports.

Unlike the information provided by CSPs (Cloud Service Providers), SPRUCE gives total transparency on how the estimates are built.

The overall approach is as follows:

- Estimate the energy used per activity (e.g. for X GB of data transferred, usage of an EC2 instance, storage, etc.)

- Add overheads (e.g. PUE, WUE)

- Apply accurate carbon intensity factors - ideally for a specific location at a specific time

- Where possible, estimate the embodied carbon related to the activity

This is compliant with the SCI (Software Carbon Intensity) specification from the Green Software Foundation.

The main columns added by SPRUCE are:

- operational_energy_kwh: amount of energy in kWh needed to use the corresponding service.

- operational_emissions_co2eq_g: emissions of CO2 eq in grams from the energy usage.

- embodied_emissions_co2eq_g: emissions of CO2 eq in grams embodied in the hardware used by the service, i.e. the emissions from producing that hardware.

The total emissions for a service are operational_emissions_co2eq_g + embodied_emissions_co2eq_g.

Enrichment modules

SPRUCE generates the estimates above by chaining EnrichmentModules, each of them relying on columns found in the usage reports or produced by preceding modules.

For instance, the AverageCarbonIntensity.java module applies average carbon intensity factors to energy estimates based on the region in order to generate operational emissions.

The list of columns generated by the modules can be found in the SpruceColumn class.

The enrichment modules are listed and configured in a configuration file. If no configuration is specified, the default one is used. See Configure the modules for instructions on how to modify the enrichment modules.

Cloud Carbon Footprint

The following modules implement the heuristics from the Cloud Carbon Footprint project.

ccf.Storage

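Provides an estimate of energy used for storage by applying a flat coefficient per unit of stored data (per terabyte-hour in the default configuration, with separate values for HDD and SSD), following the approach used by the Cloud Carbon Footprint project. See methodology for more details.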

Populates the column operational_energy_kwh.
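A minimal Java sketch of a flat storage coefficient follows. It assumes, as in the Cloud Carbon Footprint methodology, that the configuration keys hdd_coefficient_tb_h and ssd_coefficient_tb_h are expressed in watt-hours per terabyte-hour. It deliberately leaves out any replication factor or other refinement that the actual module may apply. The class and method names are hypothetical.

```java
// Sketch of a CCF-style storage estimate. Assumes hdd_coefficient_tb_h and ssd_coefficient_tb_h
// are watt-hours per terabyte-hour; replication factors and other refinements are left out.
final class StorageEnergySketch {

    static final double HDD_WH_PER_TB_HOUR = 0.65; // default hdd_coefficient_tb_h
    static final double SSD_WH_PER_TB_HOUR = 1.2;  // default ssd_coefficient_tb_h

    /** Energy in kWh for storing the given number of terabytes for the given number of hours. */
    static double energyKwh(double terabytes, double hours, boolean ssd) {
        double whPerTbHour = ssd ? SSD_WH_PER_TB_HOUR : HDD_WH_PER_TB_HOUR;
        return terabytes * hours * whPerTbHour / 1000.0; // Wh -> kWh
    }

    public static void main(String[] args) {
        // 10 TB on SSD for a 720-hour month: 7,200 TB-hours * 1.2 Wh = 8,640 Wh, about 8.64 kWh
        System.out.println(energyKwh(10, 720, true));
        // Same volume on HDD: 7,200 TB-hours * 0.65 Wh = 4,680 Wh, about 4.68 kWh
        System.out.println(energyKwh(10, 720, false));
    }
}
```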

ccf.Networking

Provides an estimate of energy used for networking in and out of data centres. It applies a flat coefficient of 0.001 kWh/GB by default; see methodology for more details. The coefficient can be changed via configuration, as shown in Configure the modules.

Populates the column operational_energy_kwh.
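The networking estimate is a single multiplication, and the coefficient is overridable through configuration. The sketch below shows both. The default mirrors network_coefficient in the default configuration. The class name and the constructor-based override are hypothetical illustrations, not SPRUCE's code.

```java
// Sketch of the flat networking coefficient: energy (kWh) = data transferred (GB) * coefficient.
final class NetworkingEnergySketch {

    static final double DEFAULT_KWH_PER_GB = 0.001; // mirrors network_coefficient in the default config

    private final double kwhPerGb;

    NetworkingEnergySketch() {
        this(DEFAULT_KWH_PER_GB);
    }

    /** Overrides the coefficient, much as a custom configuration file would. */
    NetworkingEnergySketch(double kwhPerGb) {
        this.kwhPerGb = kwhPerGb;
    }

    double energyKwh(double gigabytesTransferred) {
        return gigabytesTransferred * kwhPerGb;
    }

    public static void main(String[] args) {
        System.out.println(new NetworkingEnergySketch().energyKwh(500));      // about 0.5 kWh
        System.out.println(new NetworkingEnergySketch(0.002).energyKwh(500)); // about 1.0 kWh
    }
}
```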

ccf.Accelerators

Provides an estimate of energy used by accelerators (e.g. GPUs), following the approach used by the Cloud Carbon Footprint project. See methodology for more details.

Populates the column operational_energy_kwh.
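The sketch below assumes a CCF-style interpolation between a minimum and maximum power draw, weighted by utilisation. Only gpu_utilisation_percent (default 50) comes from the SPRUCE configuration. The wattages are hypothetical and do not describe any particular GPU, and the formula is an assumption about the methodology rather than a copy of the module.

```java
// Sketch of a CCF-style accelerator estimate, assuming
// avgWatts = minWatts + utilisation * (maxWatts - minWatts).
// Wattages are hypothetical; only the 50% utilisation default comes from the configuration.
final class AcceleratorEnergySketch {

    static double energyKwh(double gpuHours, double minWatts, double maxWatts, double utilisationPercent) {
        double utilisation = utilisationPercent / 100.0;
        double avgWatts = minWatts + utilisation * (maxWatts - minWatts);
        return gpuHours * avgWatts / 1000.0; // Wh -> kWh
    }

    public static void main(String[] args) {
        // Hypothetical GPU drawing 50 W idle and 300 W at full load, used for 10 hours at 50%:
        // 175 W average * 10 h = 1,750 Wh = 1.75 kWh
        System.out.println(energyKwh(10, 50, 300, 50)); // 1.75
    }
}
```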

Boavizta

The following modules make use of the BoaviztAPI.

boavizta.BoaviztAPI

Provides an estimate of final energy used for computation (EC2, OpenSearch, RDS) as well as the related embodied emissions using the BoaviztAPI.

Populates the columns operational_energy_kwh, embodied_emissions_co2eq_g and embodied_adp_gsbeq.

From https://doc.api.boavizta.org/Explanations/impacts/

Abiotic Depletion Potential (ADP) is an environmental impact indicator. This category corresponds to mineral and resources used and is, in this sense, mainly influenced by the rate of resources extracted. The effect of this consumption on their depletion is estimated according to their availability stock at a global scale. This impact category is divided into two components: a material component and a fossil fuels component (we use a version of ADP which include both). This impact is expressed in grams of antimony equivalent (gSbeq).

Source: sciencedirect

boavizta.BoaviztAPIstatic

Similar to the previous module, but instead of querying an instance of the BoaviztAPI, it reads the information from a static file generated from it. This makes SPRUCE simpler to use, as there is no need to run the API.

electricitymaps.AverageCarbonIntensity

Adds average carbon intensity factors generated from ElectricityMaps’ 2024 datasets. The life-cycle emission factors are used.

Populates the column carbon_intensity.

The mapping between the cloud region IDs and the ElectricityMaps IDs comes from the GSF Real Time Cloud project (see below).

Real Time Cloud

This module does not do any enrichment as such but provides access to data from the RTC project, see above.

RegionExtraction

Extracts the region information from the input and stores it in a standard location.

Populates the column region.

PUE

Applies the 2024 Power Usage Effectiveness (PUE) data published by AWS to rows for which energy usage has been estimated. This is more accurate and up to date than the flat-rate approach of the CCF methodology.

Populates the column power_usage_effectiveness.
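The behaviour described above (a per-region value, applied only where energy was estimated) can be sketched as a lookup with a fallback. The fallback of 1.15 mirrors the default value set for the PUE module in the default configuration. The region names and per-region values below are placeholders, not the AWS dataset, and the class is hypothetical.

```java
import java.util.Map;

// Sketch of a region-based PUE lookup with a fallback default, applied only to rows
// that have an energy estimate. Region names and values are placeholders.
final class PueSketch {

    private final Map<String, Double> pueByRegion;
    private final double defaultPue;

    PueSketch(Map<String, Double> pueByRegion, double defaultPue) {
        this.pueByRegion = Map.copyOf(pueByRegion);
        this.defaultPue = defaultPue;
    }

    /** Returns the PUE to apply, or null when no energy was estimated for the row. */
    Double pueFor(String region, Double operationalEnergyKwh) {
        if (operationalEnergyKwh == null) {
            return null; // nothing to apply an overhead to
        }
        if (region == null) {
            return defaultPue; // immutable maps reject null keys, so handle this case first
        }
        return pueByRegion.getOrDefault(region, defaultPue);
    }

    public static void main(String[] args) {
        PueSketch pue = new PueSketch(Map.of("region-a", 1.12), 1.15);
        System.out.println(pue.pueFor("region-a", 3.0));  // 1.12
        System.out.println(pue.pueFor("region-b", 3.0));  // 1.15 (fallback)
        System.out.println(pue.pueFor("region-a", null)); // null
    }
}
```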

Fargate

Provides an estimate of energy for the memory and vCPU usage of Fargate. The default coefficients are taken from the Tailpipe methodology.

Populates the column operational_energy_kwh.
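The Fargate estimate combines CPU and memory usage. The Java sketch below uses the coefficients listed under the Serverless module in the default configuration. It assumes they are expressed in kWh per vCPU-hour and kWh per GB-hour; that unit interpretation is an assumption, as are the class and method names.

```java
// Sketch of a Fargate energy estimate: vCPU-hours and GB-hours times per-unit coefficients.
// Assumes the default coefficients are kWh per vCPU-hour and kWh per GB-hour.
final class FargateEnergySketch {

    static final double MEMORY_KWH_PER_GB_HOUR = 0.0000598;    // memory_coefficient_kwh
    static final double X86_KWH_PER_VCPU_HOUR = 0.0088121875;  // x86_cpu_coefficient_kwh
    static final double ARM_KWH_PER_VCPU_HOUR = 0.00191015625; // arm_cpu_coefficient_kwh

    static double energyKwh(double vcpuHours, double memoryGbHours, boolean arm) {
        double cpuCoefficient = arm ? ARM_KWH_PER_VCPU_HOUR : X86_KWH_PER_VCPU_HOUR;
        return vcpuHours * cpuCoefficient + memoryGbHours * MEMORY_KWH_PER_GB_HOUR;
    }

    public static void main(String[] args) {
        // A task with 1 vCPU (x86) and 2 GB of memory running for 10 hours:
        // 10 vCPU-hours and 20 GB-hours
        System.out.println(energyKwh(10, 20, false)); // about 0.0893 kWh
    }
}
```

With these defaults, the same task on ARM would use roughly a quarter of the energy, because the ARM CPU coefficient is much lower than the x86 one.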

OperationalEmissions

Computes operational emissions based on the energy usage, average carbon intensity factors and power_usage_effectiveness estimated by the preceding modules, based on the region.

Populates the column operational_emissions_co2eq_g.

operational_emissions_co2eq_g is equal to operational_energy_kwh * carbon_intensity * power_usage_effectiveness.
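The formula above, together with the total emissions described in the Methodology section, is sketched below in Java. The class is hypothetical. Propagating null when an input is missing mimics SQL arithmetic. Counting a missing embodied estimate as zero in the total is an assumption of this sketch.

```java
// Sketch of the OperationalEmissions formula and of the total emissions for one row.
final class EmissionsSketch {

    /** operational_emissions_co2eq_g = operational_energy_kwh * carbon_intensity * power_usage_effectiveness */
    static Double operationalEmissionsG(Double energyKwh, Double carbonIntensityGPerKwh, Double pue) {
        if (energyKwh == null || carbonIntensityGPerKwh == null || pue == null) {
            return null; // like SQL, a missing input yields a missing result
        }
        return energyKwh * carbonIntensityGPerKwh * pue;
    }

    /** Operational + embodied emissions; a missing value is counted as 0 (assumption). */
    static double totalEmissionsG(Double operationalG, Double embodiedG) {
        double operational = operationalG == null ? 0.0 : operationalG;
        double embodied = embodiedG == null ? 0.0 : embodiedG;
        return operational + embodied;
    }

    public static void main(String[] args) {
        // Illustrative inputs: 2 kWh at 400 gCO2eq/kWh with a PUE of 1.2 -> about 960 g
        Double operational = operationalEmissionsG(2.0, 400.0, 1.2);
        System.out.println(operational);
        System.out.println(totalEmissionsG(operational, 40.0)); // about 1000 g in total
    }
}
```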

Comparison with other open source tools

SPRUCE is part of a growing ecosystem of open source tools focused on measuring and reducing the environmental impact of cloud computing. This page compares SPRUCE with other notable open source projects in this space.

Cloud Carbon Footprint (CCF)

Cloud Carbon Footprint is an open source tool that provides cloud carbon emissions estimates.

Note: Cloud Carbon Footprint is no longer actively maintained. As a result, its data and methodology may be outdated. SPRUCE implements CCF’s core methodology but with actively maintained data sources and models.

Similarities

- Both tools estimate the carbon footprint of cloud usage

- Both support AWS (as well as GCP and Azure for CCF)

- Both use comparable methodologies for calculating operational emissions

- Both are open source and transparent about their calculation methods

- SPRUCE implements several modules based on CCF’s methodology (see Cloud Carbon Footprint modules)

Key Differences

| Feature | SPRUCE | Cloud Carbon Footprint |
|---|---|---|
| Architecture | Apache Spark-based for scalable data processing | Node.js application with web dashboard |
| Data Processing | Batch processing of Cost and Usage Reports (CUR) in Parquet format | Real-time API calls to cloud providers |
| Primary Use Case | Enrichment of existing usage reports for GreenOps + FinOps | Standalone dashboard for carbon tracking |
| Deployment | Runs on-premises or in the cloud (e.g., EMR) without exposing data | Requires credentials to query cloud provider APIs |
| Data Privacy | Processes data locally, no external API calls for core functionality | Requires cloud provider credentials |
| Modularity | Highly modular with configurable enrichment pipelines | Fixed calculation pipeline with configuration options |
| Output | Enriched Parquet/CSV files for custom analytics and visualization | Pre-built dashboard and recommendations |
| Embodied Carbon | Includes embodied emissions via Boavizta integration | Limited embodied carbon estimates |
| Scalability | Designed for large-scale data processing with Apache Spark | Suitable for smaller to medium deployments |
| Carbon Intensity | Uses ElectricityMaps average data (lifecycle emissions) | Default factors outdated |
| Maintenance Status | Actively maintained with regular updates | No longer actively maintained |
| Complexity | Easy to run on Docker | Challenging to set up |

When to Choose SPRUCE

- You want to combine GreenOps and FinOps data in a single workflow

- You need to process large volumes of historical CUR data

- You prefer to keep your usage data within your own infrastructure

- You want to build custom dashboards and reports with tools like DuckDB, Tableau, or PowerBI

- You need fine-grained control over the calculation methodology through configurable modules

- You want access to data at the lowest-possible granularity and control what gets displayed and how

CloudScanner

CloudScanner is an open source tool by Boavizta that focuses on estimating the environmental impact of cloud resources.

Similarities

- Both tools estimate the environmental impact of cloud usage

- Both are open source and transparent about their methodologies

- Both can work with AWS cloud resources

- Both use data from the BoaviztAPI

Key Differences

| Feature | SPRUCE | CloudScanner |
|---|---|---|
| Primary Purpose | Enrichment of Cost and Usage Reports (CUR) for GreenOps + FinOps | Direct resource scanning for environmental impact |
| Architecture | Apache Spark-based batch processing | Direct API-based resource scanning |
| Data Source | Cost and Usage Reports (CUR) in Parquet/CSV format | Live cloud resource inventory via cloud provider APIs |
| Scope | Focuses on AWS CUR data enrichment | Limited to EC2 |
| Integration | Enriches existing billing data for FinOps alignment | Standalone tool for environmental assessment |
| Scalability | Designed for large-scale historical data processing | Suitable for periodic resource audits |
| Output | Enriched reports in Parquet/CSV for custom analytics | Prometheus metrics and Grafana dashboard |
| Accuracy | Uses ElectricityMaps intensity factors | Uses outdated factors from BoaviztAPI |

When to Choose SPRUCE

- You want to combine environmental impact with cost data from CUR reports

- You need to process large volumes of historical usage data

- You prefer batch processing over real-time scanning

- You want fine-grained control through configurable enrichment modules

- You need to integrate with existing FinOps workflows

- You want more accurate estimates and coverage beyond EC2

Other Related Tools

Kepler (Kubernetes Efficient Power Level Exporter)

Kepler is a CNCF project that exports energy-related metrics from Kubernetes clusters.

Key difference: Kepler focuses on real-time power consumption metrics at the container/pod level using eBPF, while SPRUCE focuses on enriching historical cost reports with carbon estimates at the service level.

Scaphandre

Scaphandre is a power consumption monitoring agent that can export metrics to various monitoring systems.

Key difference: Scaphandre provides real-time power measurements at the host/process level, while SPRUCE provides carbon estimates based on cloud usage patterns and billing data.

Please open an issue to suggest another project or an improvement to this page.

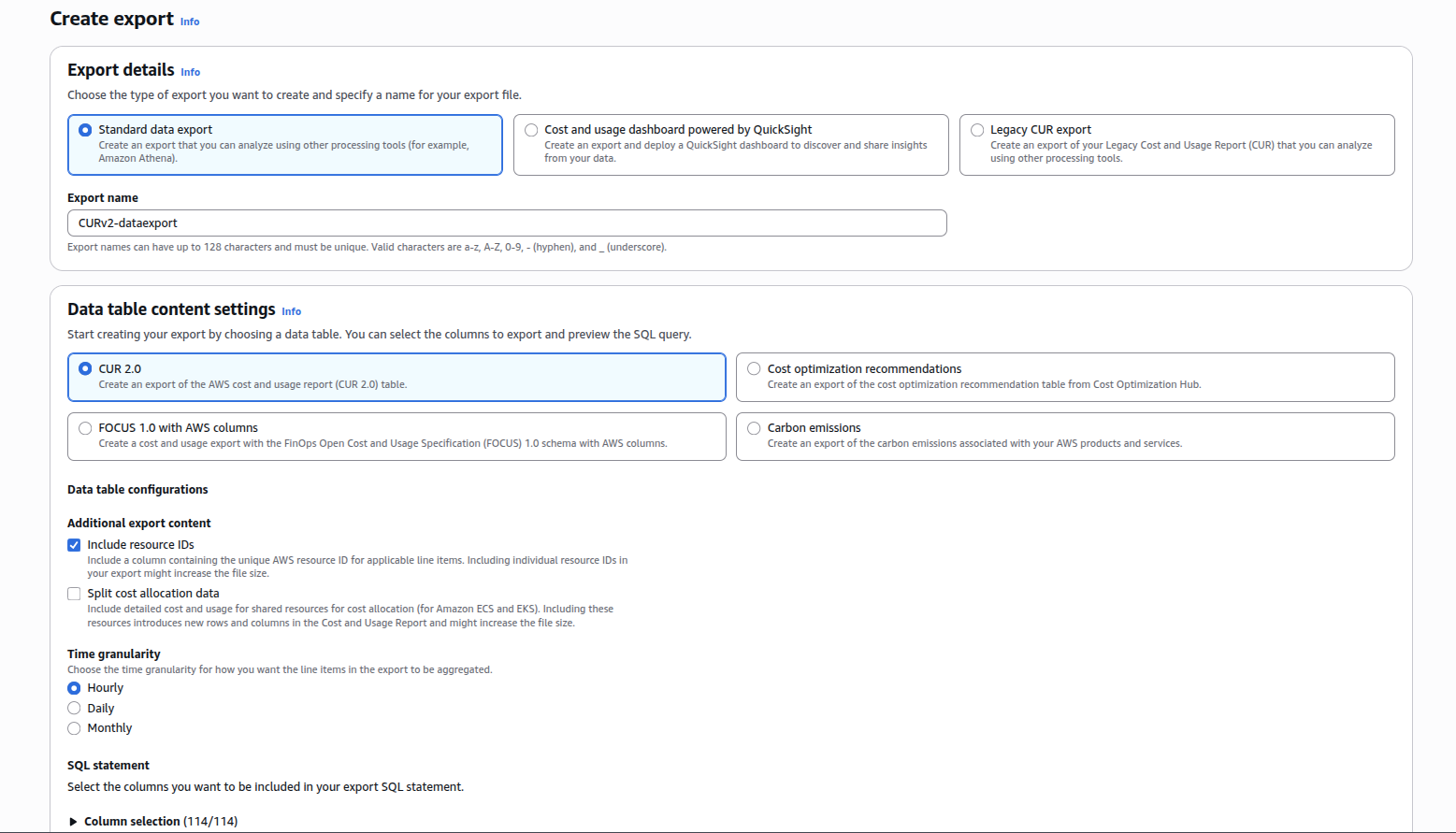

Generate CUR reports

You will need to have CUR reports as inputs. Those are generated via Data Exports and stored on S3 as Parquet files.

The Data Export will automatically populate the CURv2 reports. You can open a support ticket with AWS to get the reports backfilled with historical data.

AWS Console

In the Billing and Cost Management section, go to Cost and Usage Analysis then Data Export. Click on Create:

Give your export a name and select Include resource IDs, as shown below:

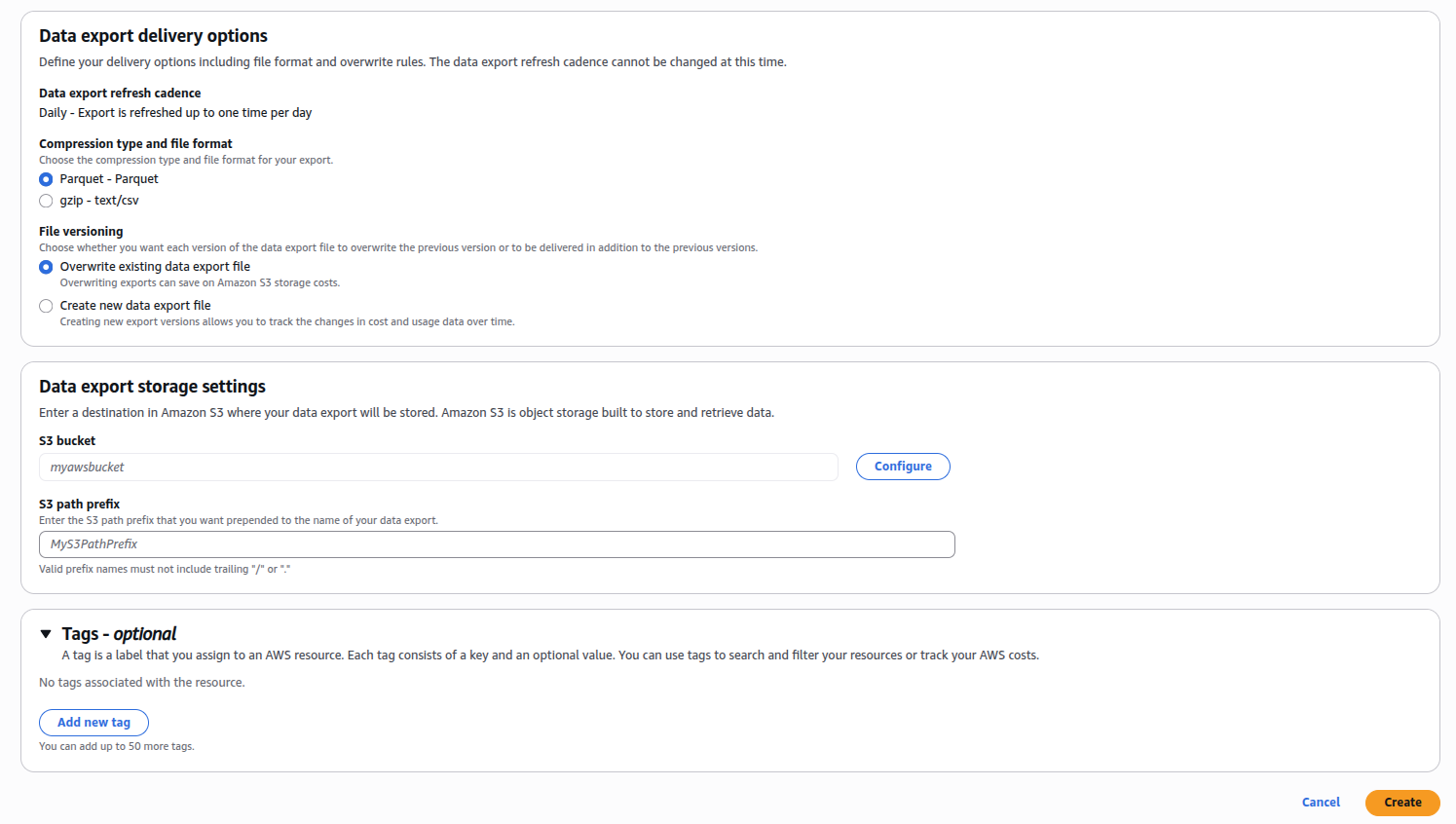

Scroll down to Data export storage settings and select an S3 bucket and a prefix.

If you create a new bucket, select a region with a low carbon intensity, such as eu-north-1 (Sweden) or eu-west-3 (France): this greatly reduces the emissions related to storing the reports.

Optionally, add Tags to track the cost and impacts of your GreenOps activities.

Command line

Make sure your AWS keys are exported as environment variables:

```bash
eval "$(aws configure export-credentials --profile default --format env)"
```

Copy the script createCUR.sh and run it on the command line. You will be asked to enter a region for the S3 bucket, a bucket name and a prefix.

This should create the bucket where the CUR reports will be stored and configure the Data Export for the CURs.

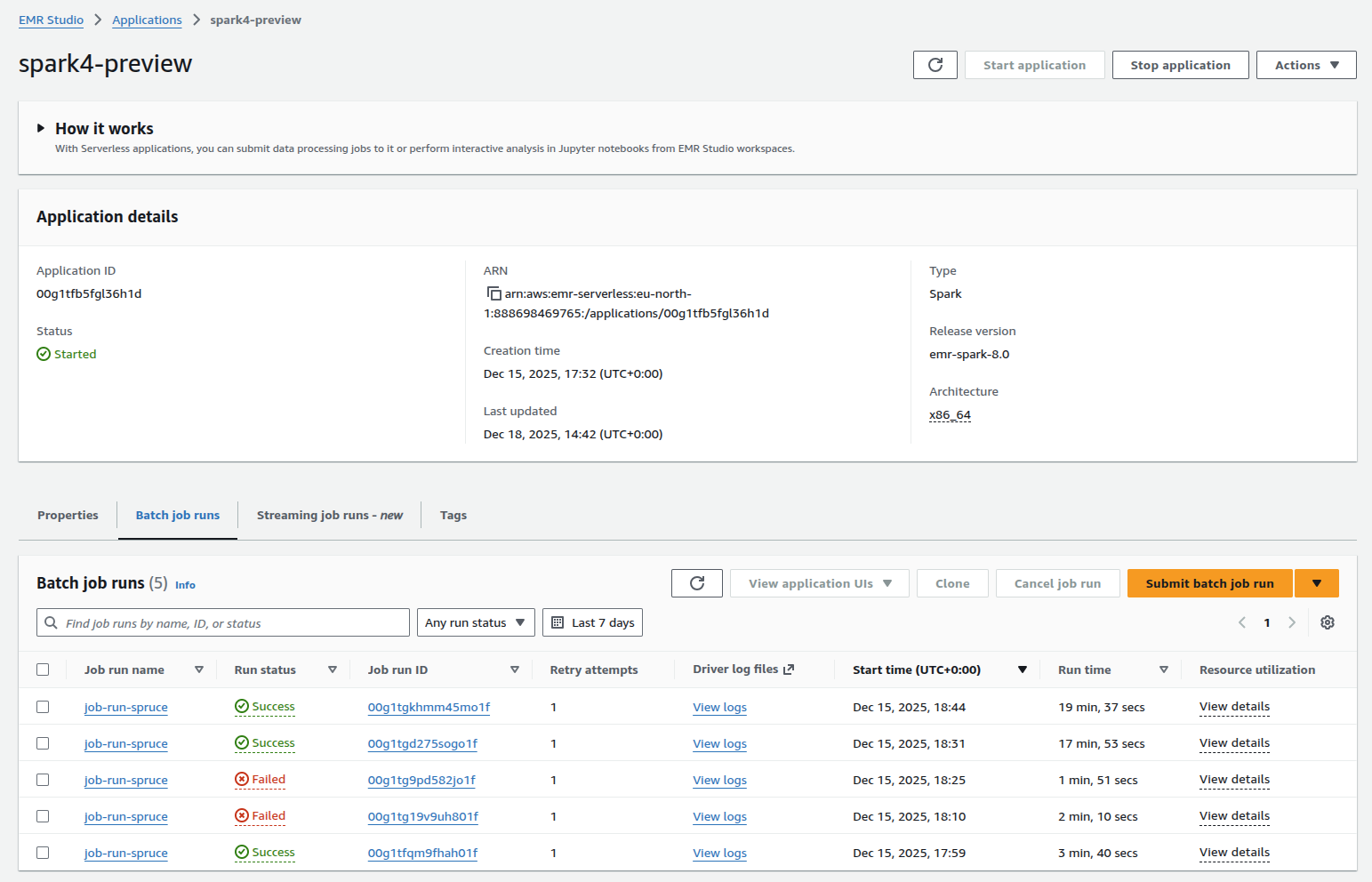

Run SPRUCE on AWS EMR Serverless

One of the benefits of using SPRUCE is that you can enrich usage reports within AWS without having to expose any of your data to an API or external service.

The easiest way to do this is by running it on AWS EMR Serverless. EMR Serverless is a fully managed, on-demand service that lets you run Apache Spark® without provisioning or managing clusters. You simply submit your jobs, and EMR Serverless automatically provisions, scales, and shuts down the required resources. You pay only for the compute and memory used while your jobs run, making it ideal for episodic, variable, or exploratory data processing workloads.

Since AWS CUR reports are stored on S3, it makes perfect sense to enrich them straight from there without having to copy them.

EMR is also designed to scale: if your CUR reports are large, this is a good way of enriching them.

EMR-Spark-8 ships with a version of Apache Spark compatible with what SPRUCE uses.

Setup

Follow these instructions to set up EMR Serverless on your AWS account. You will also need to install and configure the AWS CLI.

We will assume that you have CUR reports on S3. You will also need to make the SPRUCE jar available to EMR by placing it in an S3 bucket.

You can either compile the JAR from the SPRUCE code or grab the one from the release page.

In the example below, we put it in s3://spruce-jars/spruce-0.7.jar but since that bucket name is now taken, you will have to choose a different one.

Run on EMR

Have a look at the user guide.

From the CLI

```bash
aws emr-serverless create-application --type spark --release-label emr-spark-8.0-preview --name spark4-SPRUCE
```

Note the application ID returned in the output. You can always find it with aws emr-serverless list-applications.

Check that the application has been created with:

```bash
aws emr-serverless get-application \
  --application-id application-id
```

Next, launch a job:

```bash
aws emr-serverless start-job-run \
  --application-id application-id \
  --execution-role-arn job-role-arn \
  --name job-run-name \
  --job-driver '{
    "sparkSubmit": {
      "entryPoint": "s3://spruce-jars/spruce-0.7.jar",
      "entryPointArguments": [
        "-i", "s3://INPUT-curs/", "-o", "s3://spruce-output/"
      ],
      "sparkSubmitParameters": "--class com.digitalpebble.spruce.SparkJob --conf spark.executor.cores=1 --conf spark.executor.memory=4g --conf spark.driver.cores=1 --conf spark.driver.memory=4g --conf spark.executor.instances=1"
    }
  }'
```

where application-id is the one you got when creating the application above.

entryPoint is the location of the SPRUCE jar. The entryPointArguments are where you specify the inputs and outputs of SPRUCE, as explained in the tutorial.

Note the job run ID returned in the output.

Check the results

Depending on the size of your CUR reports, the enrichment will take more or less time. You can check whether it has completed with:

```bash
aws emr-serverless get-job-run \
  --application-id application-id \
  --job-run-id job-run-id
```

See the tutorial for examples of how to query the enriched usage reports.

Use the AWS Console

You can of course run EMR using the AWS console. This makes it easier to check the success of a job and access the logs for it.

Read / write to S3

A few additional steps are needed in order to process data from and to AWS S3. For more details, look at the Spark documentation.

Make sure your AWS keys are exported as environment variables:

```bash
eval "$(aws configure export-credentials --profile default --format env)"
```

If you want to write the outputs to S3, make sure the target bucket exists:

```bash
aws s3 mb s3://MY_TARGET_BUCKET
```

You will need to uncomment the dependency

```xml
<artifactId>spark-hadoop-cloud_2.13</artifactId>
```

in the file pom.xml in order to be able to connect to S3.

With Spark installed in local mode

You need to recompile the JAR file with mvn clean package, then launch Spark in the normal way:

```bash
spark-submit --class com.digitalpebble.spruce.SparkJob --driver-memory 8g ./target/spruce-*.jar -i s3a://BUCKET_WITH_CURS/PATH/ -o s3a://OUTPUT_BUCKET/PATH/
```

where BUCKET_WITH_CURS/PATH/ is the bucket name and path of the input CURs and OUTPUT_BUCKET/PATH/ the bucket name and path for the output.

With Docker

After you edit the pom.xml (see above), you need to build a new Docker image:

```bash
docker build . -t spruce_s3
```

You need to pass the environment variables holding your AWS keys to the container with -e, e.g.:

```bash
docker run -it --rm --name spruce \
  -e AWS_ACCESS_KEY_ID \
  -e AWS_SECRET_ACCESS_KEY \
  spruce_s3 \
  /opt/spark/bin/spark-submit \
  --class com.digitalpebble.spruce.SparkJob \
  --driver-memory 4g \
  --master 'local[*]' \
  /usr/local/lib/spruce.jar \
  -i s3a://BUCKET_WITH_CURS/PATH/ -o s3a://OUTPUT_BUCKET/PATH/
```

Modules configuration

The enrichment modules are configured in a file called default-config.json. This file is included in the JAR and looks like this:

```json
{
  "modules": [
    {
      "className": "com.digitalpebble.spruce.modules.RegionExtraction"
    },
    {
      "className": "com.digitalpebble.spruce.modules.ccf.Storage",
      "config": {
        "hdd_coefficient_tb_h": 0.65,
        "ssd_coefficient_tb_h": 1.2
      }
    },
    {
      "className": "com.digitalpebble.spruce.modules.ccf.Networking",
      "config": {
        "network_coefficient": 0.001
      }
    },
    {
      "className": "com.digitalpebble.spruce.modules.boavizta.BoaviztAPIstatic"
    },
    {
      "className": "com.digitalpebble.spruce.modules.Serverless",
      "config": {
        "memory_coefficient_kwh": 0.0000598,
        "x86_cpu_coefficient_kwh": 0.0088121875,
        "arm_cpu_coefficient_kwh": 0.00191015625
      }
    },
    {
      "className": "com.digitalpebble.spruce.modules.ccf.Accelerators",
      "config": {
        "gpu_utilisation_percent": 50
      }
    },
    {
      "className": "com.digitalpebble.spruce.modules.PUE",
      "params": {
        "default": 1.15
      }
    },
    {
      "className": "com.digitalpebble.spruce.modules.electricitymaps.AverageCarbonIntensity"
    },
    {
      "className": "com.digitalpebble.spruce.modules.OperationalEmissions"
    }
  ]
}
```

This determines which modules are used and in what order, but also configures their behaviour. For instance, the default coefficient set for the ccf.Networking module is 0.001 kWh/GB.

Change the configuration

To use a different configuration, for instance to replace a module with another one or to change a module's settings (like the network coefficient above), write a JSON file with your changes and pass it as an argument to the Spark job with -c.

Configure logging

It can be useful to change the log levels when implementing a new enrichment module. Logging in Spark is handled with log4j. You need to provide a configuration file and pass it to Spark; a good starting point is to copy the template file from Spark and save it as e.g. log4j2.properties.

The next step is to set the log level for specific resources, for instance by adding the section below:

```properties
# SPRUCE
logger.spruce.name = com.digitalpebble.spruce
logger.spruce.level = DEBUG
```

will set the log level to DEBUG for everything in the com.digitalpebble.spruce package. In practice, you would be more specific.

Once the modification is saved, you have two options:

- Rely on the current location of the file and launch Spark with the command below, where PATH is where you saved the file. Please note that the path to the file has to be absolute.

```bash
spark-submit --conf "spark.driver.extraJavaOptions=-Dlog4j.configurationFile=file:///PATH/log4j2.properties" ...
```

- With the SPRUCE code downloaded locally, save the file as src/main/resources/log4j2.properties, recompile the JAR with mvn clean package and launch Spark with the command below. In this case, the path is relative.

```bash
spark-submit --conf "spark.driver.extraJavaOptions=-Dlog4j.configurationFile=./log4j2.properties" ...
```

Either way, the console will display the logs at the level specified. Once you have finished working on the code, don’t forget to remove the log file or comment out the section you added.

Split Impact Allocation Data

SCAD

Split Cost Allocation Data or SCAD is an AWS billing and cost management feature that helps organizations gain fine-grained visibility into how cloud resources are shared and consumed across multiple services, accounts, or workloads.

When enabled, it ensures that shared costs — such as data transfer, EC2 instances, or load balancers — are allocated proportionally among all linked resources or cost allocation tags. This becomes particularly important in containerized and multi-tenant environments such as Kubernetes on AWS.

In Kubernetes, a single EC2 instance, EBS volume, or Elastic Load Balancer can support multiple pods, namespaces, or even different applications. This makes it difficult to understand:

- How much a specific team, application, or namespace costs.

- Which workloads are driving the majority of the cluster’s cloud expenses.

- How to accurately charge back or show back costs to different business units.

Without split allocation, costs appear aggregated at the resource level rather than the usage level, making accurate chargeback or budgeting almost impossible.

Split cost allocation data introduces new usage records and new cost metric columns for each containerized resource ID (that is, ECS task and Kubernetes pod) in AWS CUR. For more information, see Split line item details.

How to Enable Split Cost Allocation Data

When defining the CUR data export, activate the options Split cost allocation data as well as Include resource IDs. See Generate Cost and Usage Reports for more details.

From Cost to Impact allocation

A similar problem happens with the environmental impacts estimated by SPRUCE. The split line items (e.g. K8s pods) have a cost associated with them but the emissions and other environmental impacts are still only associated with the AWS resources.

SPRUCE has a separate Apache Spark job which:

- groups all the line items by hourly time slot and resource ID

- gets the sum of all the impacts for the resources (EC2, volume, network)

- gets the sum of the split usage ratios

- allocates the impacts to the splits based on their usage ratios
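The allocation step can be sketched for a single resource and hourly slot as follows. Grouping by time slot and resource ID is assumed to have happened upstream. The split IDs, numbers and class name are hypothetical. This sketch normalises by the sum of the ratios, so the whole impact of the resource is distributed among its splits. Whether SPRUCE treats unused capacity the same way is not stated here.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch: allocate one resource's impact (for a single hourly slot) across its splits,
// e.g. Kubernetes pods, in proportion to their usage ratios. Names and numbers are hypothetical.
final class SplitAllocationSketch {

    /** Returns impact * ratio / sum(ratios) for each split; empty if the ratios sum to zero. */
    static Map<String, Double> allocate(double resourceImpact, Map<String, Double> splitRatios) {
        double ratioSum = 0;
        for (double ratio : splitRatios.values()) {
            ratioSum += ratio;
        }
        Map<String, Double> allocated = new HashMap<>();
        if (ratioSum <= 0) {
            return allocated; // nothing to allocate against
        }
        for (Map.Entry<String, Double> split : splitRatios.entrySet()) {
            allocated.put(split.getKey(), resourceImpact * split.getValue() / ratioSum);
        }
        return allocated;
    }

    public static void main(String[] args) {
        // 300 g of emissions for an EC2 instance in one hour, shared by two pods.
        Map<String, Double> shares = allocate(300, Map.of("pod-a", 0.5, "pod-b", 0.25));
        System.out.println(shares.get("pod-a")); // 200.0
        System.out.println(shares.get("pod-b")); // 100.0
    }
}
```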

HOWTO

The call is similar to how you run Spruce:

```bash
spark-submit --class com.digitalpebble.spruce.SplitJob --driver-memory 8g ./target/spruce-*.jar -i ./enriched_curs -o ./enriched_curs_with_splits
```

The -c option lets you specify the impact columns to attribute to the splits. By default, its value is "operational_energy_kwh, operational_emissions_co2eq_g, embodied_emissions_co2eq_g".

This can be changed to cater for columns generated by services other than SPRUCE, including commercial ones such as GreenPixie or TailPipe.

Contributing to Spruce


Thank you for your intention to contribute to Spruce. As an open-source community, we highly appreciate contributions to our project.

To make the process smooth for the project committers (those who review and accept changes) and contributors (those who propose new changes via pull requests), there are a few rules to follow.

Contribution Guidelines

We use GitHub Issues and Pull Requests for tracking contributions. We expect participants to adhere to the GitHub Community Guidelines (found at https://help.github.com/articles/github-community-guidelines/ ) as well as our Code of Conduct.

Please note that your contributions will be under the ASF v2 license.

Get Involved

The Spruce project is developed by volunteers and is always looking for new contributors to work on all parts of the project. Every contribution is welcome and needed to make it better. A contribution can be anything from a small documentation typo fix to a new component. We especially welcome contributions from first-time users.

GitHub Discussions

Feel free to use GitHub Discussions to ask any questions you might have when planning your first contribution.

Making a Contribution

- Create a new issue on GitHub. Please describe the problem or improvement in the body of the issue. For larger issues, please open a new discussion and describe the problem.

- Next, create a pull request in GitHub.

Contributors who have a history of successful participation are invited to join the project as a committer.